My experience using Lighthouse in the real world

Lighthouse has been part of my daily work for the last few months and I shared some snippets in my last few posts. For this particular post, it is time to share how I am using Lighthouse in a product used by millions of people and what I have discovered during this process.

Disclaimers: 1. This content may be reviewed in the future as I learn more about web performance and Lighthouse; 2. Do not take this post as professional/legal advice; 3. Do not take my comments on tech X or Y as attacks to tech X or Y.

Use existing tools before creating your own

It sounds obvious but it doesn’t hurt to repeat the message: do not reinvent the wheel (until you absolutely need to). You can start monitoring Core Web Vitals for free ↗︎ in the Google Search Console.

If this is not enough, or if you have a complex web app behind authentication, or any other scenarios, you can start by using a tool listed in the Lighthouse integrations docs. From that list, I can only speak of Calibre ↗︎ as I have not used the others. I am not affiliated with them.

Using Calibre, you can schedule Lighthouse tests, create performance budgets, see pretty charts, and learn what performance looks like in your product. The relevant information is easy to find and their product has a very polished UI, however, at a certain point, you may want more and that means ‘custom development’.

The tooling in place

We have decided to create our own tool at Thinkific ↗︎ in order to run Lighthouse tests to be aligned with the monitoring stack and give us more flexibility. Here are some details:

- We run tests every hour;

- We run tests in our Critical User Journeys: these are important routes of our application used by different types of users;

- The report files (JSON, HTML), along with the page screenshot and HAR file are stored in S3 for future reference;

- The numbers (Lighthouse scores, assets file sizes, Web Vitals) are sent to a relational database (Postgres) and Promotheus, a monitoring system and time series database. Postgres empowers the creation of custom reports as we need and Prometheus is used with Grafana to create custom dashboards.

Comparing to open-source solutions, our tool is similar to lighthouse-monitor.

Understanding variability

Running Lighthouse in our tool, we noticed the performance score changed due to inherent variability in web and network technologies, even when there hadn't been a code change.

Network, client hardware, and web server variabilities are some examples of how the score can trick you. Lighthouse documentation clarifies all the different sources of variability and how to deal with them.

In our case, we run Lighthouse 5 times per URL, calculating a median score. We also store the min/max values in case we want to investigate one-off results.

Working with Lighthouse results

What do I do when I have Lighthouse reports from 9 different URLs?

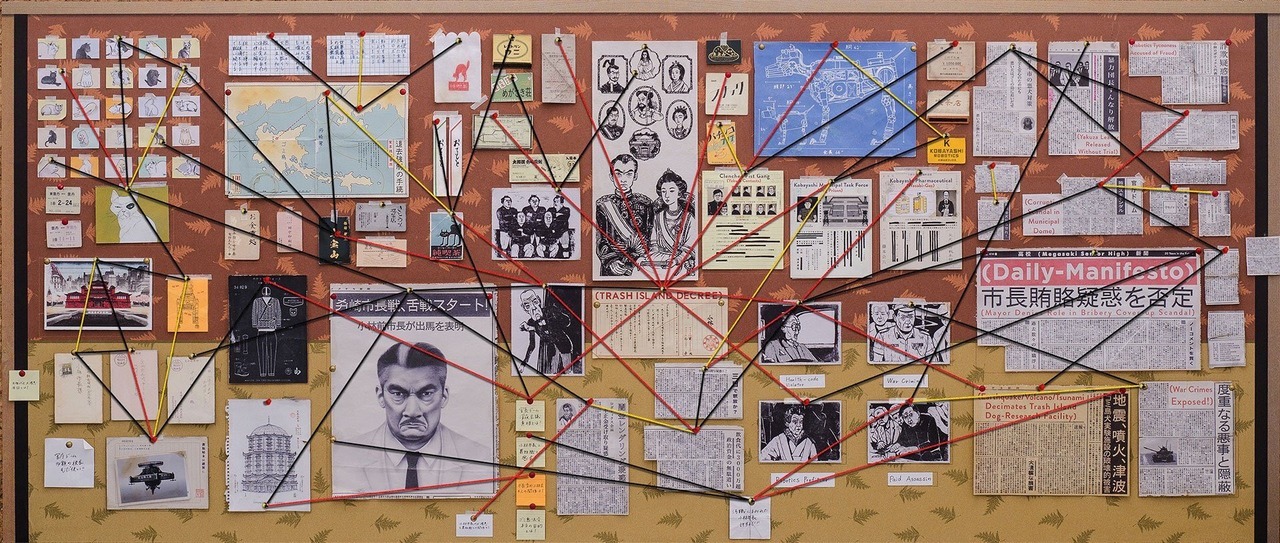

FCP, LCP, TTI, TBT, CLS: my work in the last few months is analyzing data and connecting dots. Sometimes I find low hanging fruit that improves one metric here and there, sometimes I go down the rabbit hole.

With data coming from everywhere, I am following the scientific method to focus on what matters:

- Make an observation.

- Ask a question.

- Form a hypothesis or testable explanation.

- Make a prediction based on the hypothesis.

- Test the prediction.

- Iterate: use the results to make new hypotheses or predictions.

When it comes to performance, there is no silver bullet. Sometimes images are the culprits of bad performance scores, sometimes it is an architecture problem. The goal of my post is not blaming X or Y. In saying this, let me share a few thoughts on these two topics:

Image optimization

Images impact page load time since bigger images will take longer to be downloaded and as a result, it will impact different Lighthouse metrics - usually CLS, LCP.

Recently, Google worked with Next.js to create an Image component ↗︎ that delivers optimized images. The framework supports image conversion from via Imgix, Cloudinary, Akamai and as expected, Vercel.

I predict that the conversion on demand, by using third-party services as mentioned above or by using serverless solutions will become more and more popular. Starting next year, Google will include Web Vitals metrics ↗︎ in the page ranking algorithms.

Old architectures didn't age well

Old SPA architectures doesn't perform well these days and Lighthouse captures that.

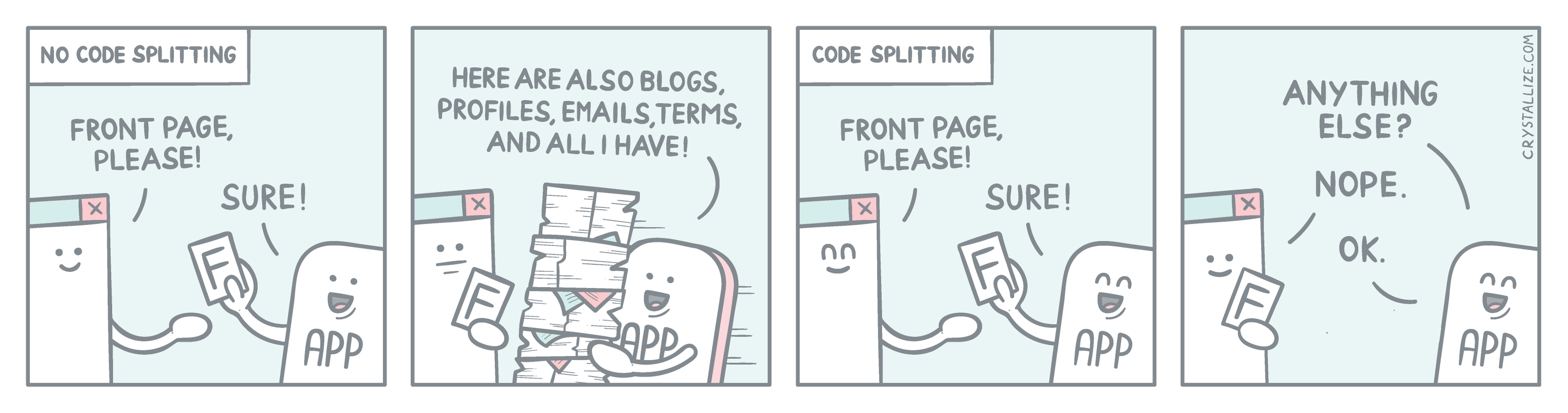

Here is one example: back in the day, people (including myself) used to build their JS code into a single file. We wanted to avoid multiple files because HTTP/1.1 didn't support too many concurrent requests, which was improved in HTTP/2. Today, unused JS will be caught in the Lighthouse tests.

Code Splitting is part of any modern JS tech stack using webpack and, in React, it can be combined with Loadable Components ↗︎ and React.lazy. Giving the user only what they need is key.

In the back end, GraphQL ↗︎ showed us that we can request data as we go. I know this can also be done with REST as long we know what is in the UI but the whole point here is to deliver only the data that users need.

Conclusions

I hope this series shed some light (no pun intended) on your front-end performance skills. Lighthouse is so powerful that people out there are creating full SaaS products to make the web better.

Are you using Lighthouse or planning to start using? Let me know in the comments!